Paper Analysis: FramePack - Enabling Longer Video Generation

1. Key Achievement: Generating Longer, High-Quality Videos

A primary achievement of the research presented in "Packing Input Frame Context..." is enabling next-frame prediction diffusion models to generate significantly longer video sequences than previously practical. It does so while maintaining visual quality and temporal coherence over the extended duration, mitigating the quality degradation and content inconsistency that plague autoregressive models when generating lengthy videos.

2. Reasons and Technical Solutions for Achieving Longer Videos

FramePack can generate longer videos because it directly tackles the two fundamental bottlenecks that limit the length of autoregressive generation: the computational/memory bottleneck (forgetting) and the error accumulation bottleneck (drifting).

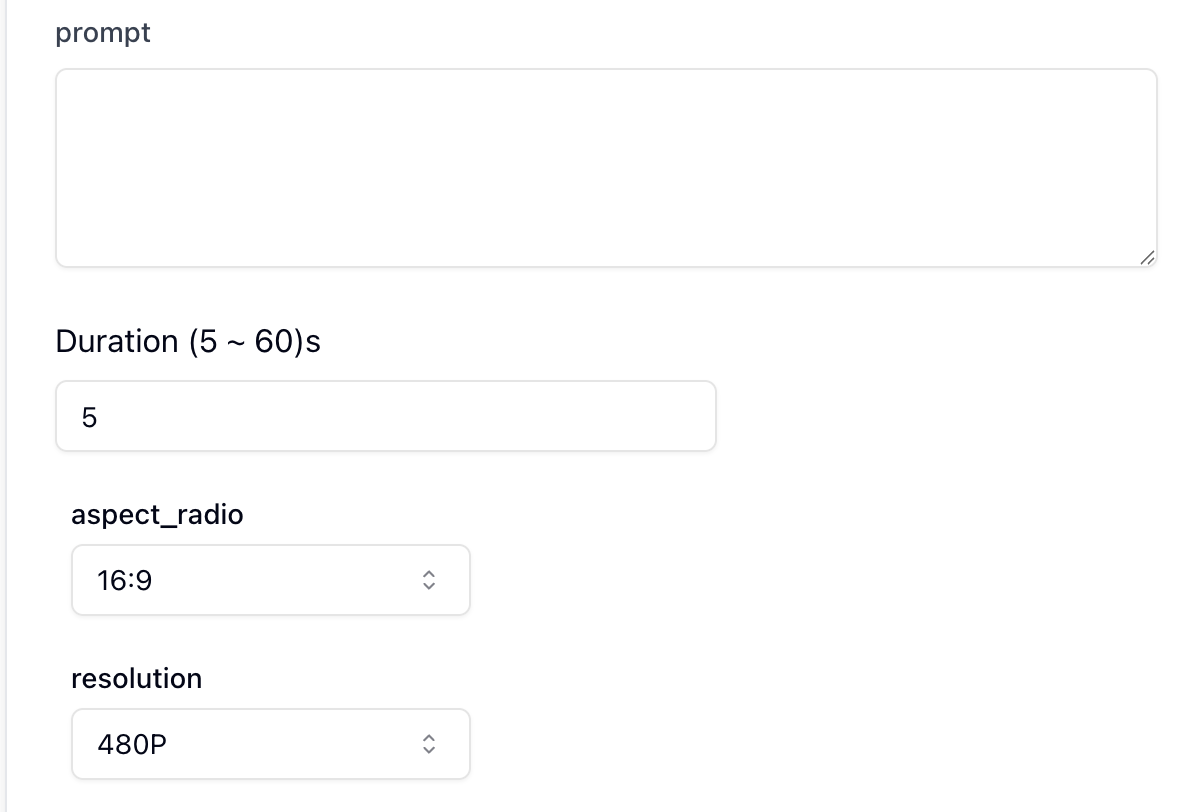

Addressing the Computational/Memory Bottleneck: The FramePack Structure

- Reason: Standard Transformers face quadratic computational complexity with respect to sequence length in their attention mechanism. This makes feeding a large number of historical frames directly into the model computationally infeasible, preventing the model from "remembering" or utilizing distant past information.

- Technical Solution (FramePack): The core idea is Progressive Context Compression. Based on the premise that temporally closer frames are generally more relevant, FramePack compresses historical frames based on their "importance" (typically temporal distance) before they enter the Transformer. Specifically, older frames are processed using larger 3D patchify kernel sizes and strides, reducing the number of tokens representing each distant frame.

- Effect: This compression scheme causes the total number of tokens (context length) fed into the Transformer to follow a converging geometric series. Even as the actual number of input frames $T$ grows indefinitely, the effective context length $L$ approaches a fixed upper bound, $L = \left(S + \frac{\lambda}{\lambda - 1}\right) \cdot L_f$ as per the paper's formula, where $S$ is the number of frames being predicted, $L_f$ is the per-frame context length, and $\lambda > 1$ is the compression parameter. This breaks the linear scaling of context length with video duration, so the computational cost per prediction step becomes independent of the total video length (similar to image generation). Consequently, the model can efficiently process and leverage a much longer history.
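The convergence claim can be checked numerically. The sketch below assumes that the i-th most recent history frame keeps a 1/λ^i fraction of its tokens, which is the geometric scheme the bound above corresponds to. The function names and the numeric values are illustrative assumptions, not taken from the paper's code.

```python
def packed_context_length(num_history_frames, num_predicted, lam, tokens_per_frame):
    """Total tokens when the i-th most recent history frame keeps 1/lam**i of its tokens."""
    history = sum(tokens_per_frame / lam**i for i in range(num_history_frames))
    return num_predicted * tokens_per_frame + history


def context_length_bound(num_predicted, lam, tokens_per_frame):
    """Limit of packed_context_length as the number of history frames grows without bound."""
    return (num_predicted + lam / (lam - 1)) * tokens_per_frame


# Illustrative values only (assumptions for this sketch).
S, LAM, L_F = 1, 2.0, 1560
for T in (1, 4, 16, 64):
    packed = packed_context_length(T, S, LAM, L_F)
    unpacked = (S + T) * L_F  # context length if every frame kept all of its tokens
    print(f"T={T:3d}  packed={packed:9.1f}  unpacked={unpacked:7d}")
print("upper bound:", context_length_bound(S, LAM, L_F))
```

The packed length flattens out near the bound while the unpacked length keeps growing linearly with T, which is the behavior the Effect bullet describes.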

Addressing the Error Accumulation Bottleneck: Anti-drifting Sampling Strategies

- Reason: In purely causal (past-to-future) generation, small errors in generating one frame are fed as input for the next, leading to compounding errors that significantly degrade video quality over time (drifting or exposure bias).

- Technical Solution: The paper proposes sampling methods that introduce Bi-directional Context to break this error accumulation chain. Key strategies include:

  - Endpoints First: Generating the start and end frames/sections of the video first, then recursively filling the gaps. These endpoints act as high-quality anchors, guiding subsequent generation and preventing unconstrained drift.

  - Inverted Temporal Order: Generating frames sequentially from the end of the video backward towards the beginning. This is particularly effective for image-to-video tasks, as the model continuously refines its output towards a known, high-quality starting frame (the user's input), providing a strong target at each step and suppressing error propagation.

- Effect: By incorporating information from the future (or a fixed high-quality target), these strategies prevent the unidirectional, unchecked propagation of errors, thus maintaining higher visual quality throughout longer generated sequences.
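The difference between these strategies is easiest to see as the order in which video sections are generated. The sketch below is a minimal illustration with hypothetical function names. In particular, filling each gap at its midpoint is only one possible reading of "recursively filling the gaps" and is an assumption of this sketch, not a rule confirmed by the paper.

```python
def causal_order(num_sections):
    """Plain past-to-future order: 0, 1, 2, ..."""
    return list(range(num_sections))


def inverted_order(num_sections):
    """Inverted temporal order: last section first, working back toward the start."""
    return list(range(num_sections - 1, -1, -1))


def endpoints_first_order(num_sections):
    """Generate both endpoints, then recursively fill the midpoint of each gap."""
    if num_sections <= 0:
        return []
    if num_sections == 1:
        return [0]
    order = [0, num_sections - 1]

    def fill(lo, hi):
        # Sections lo and hi already exist; adjacent sections leave no gap to fill.
        if hi - lo < 2:
            return
        mid = (lo + hi) // 2
        order.append(mid)  # mid is generated with context on both sides
        fill(lo, mid)
        fill(mid, hi)

    fill(0, num_sections - 1)
    return order


print(causal_order(5))           # [0, 1, 2, 3, 4]
print(inverted_order(5))         # [4, 3, 2, 1, 0]
print(endpoints_first_order(5))  # [0, 4, 2, 1, 3]
```

In the causal order, each section only ever sees earlier, possibly degraded sections. In the other two orders, every step after the first has a fixed anchor on the far side, either an endpoint or the known starting frame.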

3. Comparison with Other Approaches for Long Video Generation

FramePack's approach to generating longer videos differs significantly from other methods:

- Compared to Full Video Models (e.g., Sora-like): Full video models process entire chunks simultaneously, incurring massive computational costs that scale poorly with duration. FramePack uses a step-wise autoregressive approach but manages the cost per step via compression, offering potentially better scalability in length, though requiring explicit mechanisms (FramePack structure, sampling) to ensure long-range consistency.

- Compared to Standard Autoregressive Models: Standard models suffer directly from the computational bottleneck and severe drifting, limiting practical video length. FramePack provides direct solutions to both problems through context compression (computation/memory) and anti-drifting sampling (error accumulation).

- Compared to Anchor/Planning-Based Methods: Methods using fixed anchors rely on anchor quality and placement. FramePack's anti-drifting sampling (especially the inverted temporal order) offers a more dynamic, integrated strategy for maintaining quality and consistency without necessarily relying on external planning.

- Compared to Attention Optimization Methods (e.g., Sparse Attention, KV Caching): These aim to make the Transformer itself more efficient for long sequences. FramePack operates before the attention layers by reducing the number of input tokens for older frames and specifically addresses drift via sampling. The approaches could potentially be complementary.

- Compared to Drift-Only Mitigation Methods (e.g., Noisy History): These often interrupt error propagation by sacrificing some historical information fidelity, potentially worsening the forgetting problem. FramePack aims to mitigate drift while simultaneously preserving access to a longer effective history through its compression mechanism.
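To make the contrast with attention-level optimizations concrete, the sketch below counts tokens before they reach attention. It assumes a hypothetical 60x104 latent frame and a hypothetical schedule of spatial patchify kernels that grow with frame age. The temporal kernel dimension is omitted for simplicity, and attention cost is modeled as the square of the token count.

```python
import math


def tokens_per_frame(latent_h, latent_w, kernel_h, kernel_w):
    """Tokens produced by patchifying one latent frame (ceil models padding at the edges)."""
    return math.ceil(latent_h / kernel_h) * math.ceil(latent_w / kernel_w)


def context_tokens(latent_h, latent_w, kernels):
    """Total tokens for a list of frames, one (kernel_h, kernel_w) pair per frame."""
    return sum(tokens_per_frame(latent_h, latent_w, kh, kw) for kh, kw in kernels)


LATENT_H, LATENT_W = 60, 104                 # assumed latent size, for illustration
packed_kernels = [(2, 2), (4, 4), (8, 8)]    # newest to oldest; hypothetical schedule
uniform_kernels = [(2, 2)] * 3               # every frame keeps full resolution

packed = context_tokens(LATENT_H, LATENT_W, packed_kernels)
uniform = context_tokens(LATENT_H, LATENT_W, uniform_kernels)

# Full self-attention scales with the square of the token count.
print("packed tokens:", packed, "uniform tokens:", uniform)
print("relative attention cost:", round(packed**2 / uniform**2, 3))
```

Because the reduction happens before attention, any attention-level speedup would apply on top of the already shorter sequence, which is why the approaches could be complementary.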

In summary, FramePack achieves longer video generation by uniquely combining an efficient context compression mechanism to handle extensive history with specific non-causal sampling strategies to counteract error accumulation, offering a distinct and comprehensive solution compared to other existing approaches.